AI-powered testing tools are revolutionizing software testing by automating repetitive tasks, improving test coverage, and accelerating release cycles. These tools use machine learning and advanced algorithms to adapt to application changes, predict potential issues, and suggest improvements, ultimately leading to a smarter and more proactive quality assurance process. While AI in testing still requires human oversight, it acts as an intelligent assistant that empowers QA teams to focus on more strategic tasks and deliver higher-quality applications with greater efficiency.

In this article, we’ll explore why QA teams need to adapt to AI-powered tools, how to assess your team’s readiness, and the best practices for integrating AI into your testing workflows.

Why QA Teams Need to Adapt to AI-Powered Tools

Traditional testing now faces several challenges, including:

- Complexity of Modern Applications – The rise of microservices architectures, dynamic user interfaces, and frequent updates has made manual test script maintenance labor-intensive and inefficient.

- Shortened Release Cycles – Continuous integration and deployment (CI/CD) pipelines require real-time testing insights and automation that can quickly adapt to changes.

- Resource Constraints – Manual test maintenance demands significant time and effort, diverting focus from strategic initiatives and innovation.

AI-driven test automation effectively addresses these challenges. AI accelerates testing by automating repetitive tasks and executing tests with minimal human intervention. AI-powered tools can prioritize the most critical test cases, eliminating redundant runs and optimizing resource utilization.

Additionally, ML algorithms analyze historical test data to predict potential failures and enhance test coverage. AI also detects unstable (flaky) tests, allowing teams to debug more efficiently and improve reliability. By leveraging AI-powered tools, QA teams can detect defects with greater precision, reduce human error, and elevate software quality.

The Benefits of Integrating AI into Testing Workflows

Self-Healing Tests. AI automatically updates test scripts when UI elements change, reducing maintenance efforts and minimizing downtime due to broken tests.

Intelligent Test Case Generation. AI analyzes application behavior and user interactions to generate relevant test cases, improving coverage and identifying edge cases that manual testing might overlook.

Predictive Analytics for Risk-Based Testing. AI identifies high-risk areas and prioritizes test execution, ensuring that critical issues are detected early in the development cycle.

Faster Test Execution and Optimization. AI tools run tests in parallel, optimize test suites, and eliminate redundant tests, significantly accelerating the QA process and improving CI/CD efficiency.

Scalability and Adaptability. AI-driven platforms seamlessly scale with project expansion, handling large test suites efficiently while reducing reliance on manual testing.

Broader Integration Capabilities. AI testing tools integrate with industry-standard platforms like Jira, GitHub, and Jenkins, enabling smooth workflow automation and better collaboration across teams.

Continuous Learning and Improvement. AI-powered tools continuously learn from past test results, refining their processes and improving accuracy over time. This self-learning capability ensures ongoing optimization of test execution and defect detection.

Improved Accuracy and Reliability. By minimizing human error, AI ensures more precise defect detection, enhances test reliability, and improves the overall quality of software releases.

Cost-Effectiveness and Flexibility. AI-powered testing allows teams to start small and scale gradually, integrating tools based on project needs. This approach makes AI-driven QA more accessible and cost-effective in the long run.

Key Capabilities of AI-Driven Testing Solutions

These capabilities enable QA teams to optimize test creation, execution, and defect management, making AI an essential component of modern testing strategies:

- Self-Healing Test Scripts. AI-driven self-healing test scripts automatically adapt to changes in an application’s UI or codebase, reducing maintenance efforts and increasing test stability.

Advantages of Self-Healing Tests:

- Automated Script Updates – When UI elements or workflows change, AI modifies test scripts to prevent failures.

- Reduced Maintenance Effort – Teams spend less time manually updating tests, allowing them to focus on high-value tasks.

- Greater Test Accuracy – AI improves test reliability by minimizing disruptions caused by evolving applications.
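
To make the idea concrete, here is a minimal sketch of one simple way to approximate self-healing: a fallback locator chain. It is an illustration under stated assumptions, not how any particular tool works. The `find_with_healing` function and the dictionary-based page model are hypothetical, and a real tool would typically rank candidate elements with a learned model rather than walk a fixed list.

```python
from typing import Optional

# Hypothetical page model: maps a locator string to an element (absent if not on the page).
Page = dict[str, str]


def find_with_healing(page: Page, locators: list[str]) -> tuple[Optional[str], Optional[str]]:
    """Try locators in priority order and return (element, locator_used).

    The first locator is the primary one; the rest are fallbacks.
    Returns (None, None) when no locator matches.
    """
    for locator in locators:
        element = page.get(locator)
        if element is not None:
            return element, locator
    return None, None


# The button's id changed from "submit" to "submit-btn" in a new build,
# but its data-test attribute stayed the same.
new_build = {"#submit-btn": "<button>", "[data-test=submit]": "<button>"}
element, used = find_with_healing(new_build, ["#submit", "[data-test=submit]"])
healed = used != "#submit"  # the primary locator failed and a fallback matched
```

When a fallback matches, a tool built this way could record the new locator and propose it as an update to the script, which is where the maintenance savings come from.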

- AI-Driven Test Case Generation. AI automates the process of creating test cases by analyzing application code, user interactions, historical test data, and past defects. This ensures that test scenarios remain relevant and comprehensive.

How AI Enhances Test Case Generation:

- Expanded Test Coverage – AI identifies edge cases, boundary conditions, and negative test scenarios that manual testers might overlook.

- Automated Adaptation – AI adjusts test cases dynamically as new features are added or existing functionality changes, ensuring tests stay aligned with the latest application version.

- Optimized Efficiency – AI prioritizes critical application areas, balancing thorough testing with execution speed.

- Predictive Analytics for Test Execution. AI enhances test execution by analyzing historical test data to predict potential failures and optimize test selection. This reduces redundant testing and accelerates release cycles.

Key Benefits of Predictive Analytics:

- Intelligent Test Prioritization – AI dynamically adjusts testing strategies, ensuring high-risk areas receive greater attention.

- Resource Optimization – AI minimizes unnecessary test runs, reducing execution time and infrastructure costs.

- Parallel Execution Optimization – AI determines which tests can run concurrently and which should execute sequentially based on dependencies.

- Real-Time Failure Detection – AI pinpoints flaky tests and high-risk areas during execution, providing actionable insights for debugging.
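
The prioritization idea above can be sketched with a simple heuristic: rank tests by historical failure rate and boost those that cover recently changed code. This is a rough stand-in for what a trained model would do. The `HistoryRecord` type, the `touches_changed_code` flag, and the 2x boost are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class HistoryRecord:
    name: str
    runs: int                   # total recorded executions
    failures: int               # executions that failed
    touches_changed_code: bool  # hypothetical flag from change-impact analysis


def risk_score(record: HistoryRecord) -> float:
    """Historical failure rate, doubled when the test covers changed code."""
    # Tests with no history get the maximum rate so they are not starved.
    failure_rate = record.failures / record.runs if record.runs else 1.0
    return failure_rate * (2.0 if record.touches_changed_code else 1.0)


def prioritize(records: list[HistoryRecord]) -> list[str]:
    """Return test names ordered from highest to lowest risk score."""
    return [r.name for r in sorted(records, key=risk_score, reverse=True)]


history = [
    HistoryRecord("test_login", runs=100, failures=2, touches_changed_code=False),
    HistoryRecord("test_checkout", runs=100, failures=10, touches_changed_code=True),
    HistoryRecord("test_search", runs=100, failures=15, touches_changed_code=False),
]
order = prioritize(history)
```

Here `test_checkout` moves ahead of `test_search` despite a lower raw failure rate, because it exercises code that just changed.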

- Defect Prediction and Root Cause Analysis. AI-based testing platforms predict defect-prone areas within an application by analyzing historical bug patterns, code changes, and prior test results. This helps teams proactively address potential issues.

How AI Improves Defect Management:

- Early Defect Prediction – AI highlights areas most likely to contain defects, allowing testers to focus efforts where they are needed most.

- Automated Root Cause Analysis – AI quickly identifies the source of a defect, whether it's faulty code, misconfigurations, or inconsistent test cases.

- Data-Driven Debugging – AI provides comprehensive insights into defect trends, helping teams improve long-term software quality.
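
As a rough illustration of defect prediction, the sketch below ranks modules by a weighted mix of recent code churn and past bug counts. Both signals, the field names `lines_changed` and `past_bugs`, and the equal weighting are assumptions. Production tools usually learn such weights from data rather than fixing them by hand.

```python
def rank_defect_prone(
    modules: dict[str, dict[str, int]], churn_weight: float = 0.5
) -> list[tuple[str, float]]:
    """Score each module in [0, 1] and return (name, score) pairs, riskiest first.

    Each signal is normalized by its maximum across modules so that
    churn and bug counts are comparable before weighting.
    """
    max_churn = max(m["lines_changed"] for m in modules.values()) or 1
    max_bugs = max(m["past_bugs"] for m in modules.values()) or 1
    scores = {
        name: churn_weight * m["lines_changed"] / max_churn
        + (1 - churn_weight) * m["past_bugs"] / max_bugs
        for name, m in modules.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Made-up sample data for illustration only.
modules = {
    "payments": {"lines_changed": 400, "past_bugs": 8},
    "auth": {"lines_changed": 100, "past_bugs": 10},
    "search": {"lines_changed": 50, "past_bugs": 1},
}
ranking = rank_defect_prone(modules)
```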

- AI-Powered Continuous Testing in CI/CD Pipelines. AI seamlessly integrates with CI/CD pipelines, enabling continuous testing throughout the software development lifecycle. This ensures rapid feedback and high test reliability.

AI Capabilities in CI/CD Testing:

- Automated Test Execution – AI autonomously runs tests for every code update, identifying issues before deployment.

- Dynamic Adaptation – AI modifies testing strategies in real-time based on changes in the application.

- Accelerated Feedback Loops – AI quickly analyzes results, allowing teams to make data-driven decisions on test execution.
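
One building block behind fast feedback in a pipeline is change-based test selection: run only the tests affected by a commit. The sketch below is a deterministic baseline that AI-driven tools refine with learned signals, not a description of any specific product. `COVERAGE_MAP` and `select_tests` are hypothetical names, and the map is assumed to come from coverage data collected on earlier runs.

```python
# Hypothetical mapping from source files to the tests that exercise them.
COVERAGE_MAP = {
    "app/cart.py": {"test_cart", "test_checkout"},
    "app/auth.py": {"test_login"},
    "app/search.py": {"test_search"},
}


def select_tests(changed_files: list[str], coverage_map: dict[str, set[str]]) -> list[str]:
    """Return the sorted tests affected by a commit.

    Files missing from the map are treated as unknown risk, so the whole
    suite is selected rather than silently skipping coverage.
    """
    all_tests = set().union(*coverage_map.values())
    selected: set[str] = set()
    for path in changed_files:
        if path not in coverage_map:
            return sorted(all_tests)
        selected |= coverage_map[path]
    return sorted(selected)


tests_for_commit = select_tests(["app/cart.py"], COVERAGE_MAP)
```

Falling back to the full suite for unmapped files is a deliberately conservative choice: it trades some speed for the guarantee that a new file never ships untested.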

Assessing Your QA Team’s Readiness for AI Adoption in 3 Steps

You need to assess your team’s readiness before implementing AI.

Step #1. Identify Skill Gaps: Does your team understand AI and ML basics?

AI and machine learning are at the core of modern QA tools, enabling capabilities like predictive analysis, smart test selection, and flaky test detection. However, these technologies require a foundational understanding to be used effectively.

- Assess Current Knowledge: Determine whether your team is familiar with AI and ML concepts. Do they understand how these technologies can enhance testing processes?

- Provide Training: If gaps exist, invest in training programs to build foundational knowledge. Online courses, workshops, or even internal knowledge-sharing sessions can help bridge the gap.

- Encourage Curiosity: Foster a culture of learning by encouraging team members to explore AI tools and their potential applications in QA.

Step #2. Evaluate Current Processes: Are your workflows compatible with AI tools?

AI-powered tools are designed to enhance efficiency, but their effectiveness depends on how well they align with your existing workflows.

- Analyze Workflows: Review your current QA processes to identify areas where AI can add value. For example, are there repetitive tasks that could be automated? Are there bottlenecks in test maintenance or execution?

- Assess Tool Compatibility: Ensure that your existing tools and systems can integrate with AI solutions. Compatibility issues can hinder adoption and reduce the effectiveness of AI tools.

- Streamline Processes: Simplify and standardize workflows where possible. AI tools work best in well-structured environments, so eliminating unnecessary complexity will set the stage for smoother integration.

Step #3. Set Training Objectives: Define clear goals for AI adoption, such as reducing test maintenance or improving coverage

Adopting AI is not just about implementing new tools – it’s about achieving specific outcomes that enhance your QA processes.

- Define Clear Goals: Establish measurable objectives for AI adoption. For example, aim to reduce test maintenance time by 30%, improve test coverage by 20%, or decrease flaky tests by 50%.

- Align with Business Objectives: Ensure that your AI adoption goals align with broader business priorities, such as faster release cycles or higher software quality.

- Monitor Progress: Track key metrics to evaluate the impact of AI tools on your QA processes. Use this data to refine your approach and demonstrate the value of AI adoption to stakeholders.
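
Goals like these are easiest to monitor when they are written down as numbers. The sketch below checks progress against the example targets above, reading each target as a relative change from a baseline. The baseline and current values are made up, and the `goals` structure and helper names are hypothetical.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline in percent (negative means a decrease)."""
    return (current - baseline) * 100 / baseline


def goal_met(goal: dict[str, float]) -> bool:
    """A negative target is a reduction goal; a positive target is a growth goal."""
    change = percent_change(goal["baseline"], goal["current"])
    return change <= goal["target"] if goal["target"] < 0 else change >= goal["target"]


# Targets mirror the example goals; baseline and current numbers are invented.
goals = {
    "maintenance_hours": {"baseline": 40, "current": 26, "target": -30},
    "coverage_percent": {"baseline": 60, "current": 69, "target": 20},
    "flaky_tests": {"baseline": 20, "current": 12, "target": -50},
}
status = {name: goal_met(goal) for name, goal in goals.items()}
```

With these sample numbers, maintenance time is down 35% and meets its goal, while coverage (up 15%) and flaky tests (down 40%) are still short of their targets.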

Key Training Areas for QA Teams

AI Fundamentals for Testers: Teach Basic Concepts of ML, NLP, and AI in Testing. Your team needs a solid understanding of the underlying technologies.

- Machine Learning (ML) Basics: Introduce concepts like supervised and unsupervised learning, training data, and algorithms. Explain how ML can predict defects, optimize test coverage, and prioritize test cases.

- Natural Language Processing (NLP): Teach how NLP enables AI tools to understand and generate human language, which is particularly useful for testing conversational interfaces or analyzing user feedback.

- AI in Testing Context: Help testers understand how AI can enhance traditional testing processes, such as automating repetitive tasks, identifying patterns, and improving accuracy.

Hands-On Experience with AI Testing Tools: Conduct Workshops to Familiarize Teams with AI Testing Tools. Theory alone isn’t enough – your team needs practical experience with AI testing tools to build confidence and competence.

- Tool Selection: Choose popular AI-powered testing tools, which offer features like self-healing tests, visual testing, and smart test creation.


- Interactive Workshops: Organize hands-on sessions where team members can explore these tools, create test cases, and analyze results. Encourage experimentation and problem-solving.

- Real-World Scenarios: Simulate real-world testing challenges to help teams understand how AI tools can address specific pain points, such as flaky tests or dynamic UI changes.

Test Strategy Adaptation: Shift from Scripted Automation to AI-Driven Execution. AI-powered testing requires a different approach compared to traditional scripted automation.

- Dynamic Test Creation: Train teams to move away from rigid, scripted tests and embrace AI-driven tools that can adapt to changes in the application.

- Test Maintenance: Teach teams how AI tools can reduce maintenance efforts by automatically updating test scripts when the application changes.

- Risk-Based Testing: Encourage teams to use AI insights to prioritize high-risk areas, ensuring critical functionalities are tested thoroughly.

Interpreting AI-Generated Insights: Train Teams to Analyze AI Reports and Make Data-Driven Decisions. AI tools generate vast amounts of data, but their value lies in how well your team can interpret and act on these insights.

- Understanding AI Reports: Teach teams how to read and analyze AI-generated reports, including metrics like test coverage, defect predictions, and flaky test detection.

- Data-Driven Decision-Making: Encourage teams to use AI insights to make informed decisions, such as optimizing test suites, improving test coverage, or addressing recurring issues.

- Collaboration with Developers: Train testers to communicate AI insights effectively with developers, fostering collaboration and faster resolution of defects.

Best Practices for Effective AI Training

Implement Structured Training: Workshops, Online Courses & Certifications

A well-structured training program is the foundation of successful AI adoption.

- Workshops: Organize hands-on workshops where team members can explore AI tools in a guided environment. These sessions should focus on practical applications, such as creating AI-driven test cases or interpreting AI-generated insights.

- Online Courses: Leverage online learning platforms like Coursera, Udemy, or LinkedIn Learning to provide flexible, self-paced training on AI fundamentals, machine learning, and specific tools.

- Certifications: Encourage team members to pursue certifications in AI and machine learning. Certifications not only validate their skills but also boost their confidence in using AI tools effectively.

Foster Continuous Learning: Keep Teams Updated on AI Trends & Tools

Continuous learning ensures your team remains agile and adaptable in the face of evolving AI technologies. Staying updated is crucial for long-term success.

- Industry Resources: Share blogs, webinars, and whitepapers from industry leaders to keep your team informed about the latest AI trends and advancements in QA.

- Internal Knowledge Sharing: Foster a culture of learning by organizing regular knowledge-sharing sessions where team members can discuss new tools, techniques, and insights.

- Experiment with New Tools: Encourage your team to explore emerging AI tools and technologies. Allocate time for experimentation and innovation to keep your QA processes cutting-edge.

Empower AI Champions: Designate Leaders to Drive Adoption & Mentor Teams

AI champions act as catalysts, driving enthusiasm and ensuring a smooth transition to AI-powered testing.

- Identify Enthusiasts: Look for team members who are passionate about AI and willing to take on a leadership role in its adoption.

- Mentorship: Assign AI champions to mentor their peers, answer questions, and provide guidance on using AI tools effectively.

- Advocacy: Encourage AI champions to promote the benefits of AI adoption, share success stories, and address any concerns or resistance within the team.

Start Small: Pilot AI Tools on Limited Projects Before Scaling

Before rolling out AI tools across the board, start with pilot projects to evaluate their effectiveness and identify potential challenges.

- Select Low-Risk Projects: Choose small, low-risk projects to test AI tools. This minimizes the impact of any issues and allows your team to learn in a controlled environment.

- Set Clear Goals: Define measurable objectives for the pilot project, such as reducing test execution time or improving defect detection rates.

- Gather Feedback: Collect feedback from the team on the tool’s usability, effectiveness, and any challenges faced. Use this feedback to refine your approach before scaling up.

Overcoming Challenges in AI Test Adoption

Addressing Skepticism: Educate Teams on the Benefits of AI and Address Concerns About Job Displacement

One of the biggest barriers to AI adoption is skepticism and fear of change. Team members may worry about job displacement or doubt the effectiveness of AI tools.

- Highlight Benefits: Educate your team on how AI can enhance their work, such as reducing repetitive tasks, improving accuracy, and enabling them to focus on higher-value activities like exploratory testing and strategy.

- Debunk Myths: Address misconceptions about AI replacing human testers. Emphasize that AI is a tool to augment their capabilities, not replace them.

- Showcase Success Stories: Share examples of organizations that have successfully adopted AI in QA, demonstrating tangible improvements in efficiency and quality.

Managing the Transition: Gradually Integrate AI Tools into Existing Workflows to Avoid Disruption

A sudden shift to AI-powered testing can overwhelm teams and disrupt workflows. A gradual, phased approach is key to a smooth transition.

- Start Small: Begin by introducing AI tools for specific tasks, such as test case prioritization or flaky test detection, rather than overhauling the entire QA process.

- Provide Training: Ensure your team has the skills and knowledge to use AI tools effectively. Offer hands-on training and ongoing support to build confidence.

- Iterate and Improve: Gather feedback from the team and refine your approach as you go. This iterative process ensures that AI tools are integrated in a way that complements existing workflows.

Aligning with Business Goals: Ensure AI Adoption Supports Broader Objectives Like Faster Releases or Higher Quality

AI adoption should not be an isolated initiative. It must align with your organization’s broader business goals.

- Define Clear Objectives: Identify how AI can support key business priorities, such as accelerating release cycles, improving software quality, or reducing costs.

- Measure Impact: Track metrics like test execution time, defect detection rates, and test coverage to demonstrate the value of AI adoption to stakeholders.

- Collaborate Across Teams: Work closely with development, product, and business teams to ensure AI adoption aligns with their needs and contributes to overall success.

Measuring Success: How to Evaluate AI Training Effectiveness

To evaluate the effectiveness of AI training, you should track Key Performance Indicators (KPIs) that measure accuracy, efficiency, adaptability, and overall impact on testing workflows. Here are the most important KPIs:

1. Model Performance Metrics

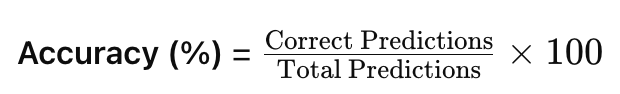

- Accuracy (%) – Measures how often AI predictions or test results match expected outcomes.

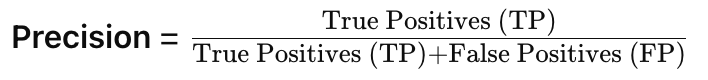

- Precision & Recall – Precision is the share of AI-flagged test cases or defects that are real issues; recall is the share of real issues that the AI flagged.

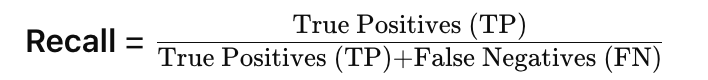

- F1 Score – The harmonic mean of precision and recall, useful for evaluating the AI when both false positives and false negatives matter.

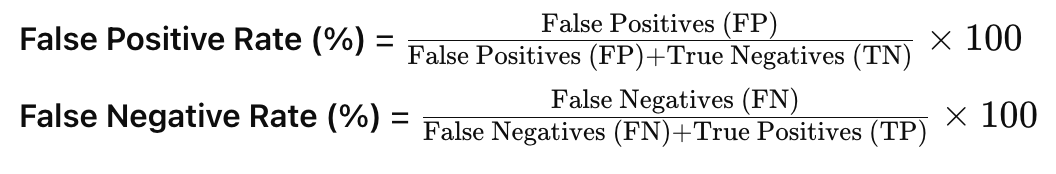

- False Positive/Negative Rate – Helps determine if the AI is over-reporting or under-detecting issues.
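
The model performance metrics above all derive from four counts: true positives, false positives, true negatives, and false negatives. The sketch below computes them using the standard definitions. The function name and the sample counts are illustrative, with "positive" assumed to mean "the AI flagged a defect".

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Compute standard metrics from confusion-matrix counts.

    Each ratio falls back to 0.0 when its denominator is zero.
    """
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


# Made-up counts: 200 predictions, 50 real defects, 50 flagged by the AI.
metrics = classification_metrics(tp=40, fp=10, tn=140, fn=10)
```

Note that accuracy alone can mislead when defects are rare: a tool that never flags anything would still score high accuracy, which is why precision, recall, and the error rates are tracked alongside it.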

2. Test Execution Efficiency

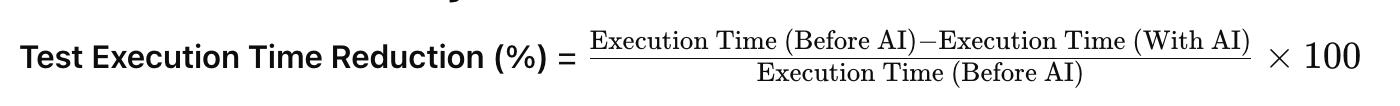

- Test Execution Time Reduction (%) – Compares AI-driven test execution speed to traditional methods.

- Automated vs. Manual Test Ratio – Tracks the percentage of tests executed by AI vs. manual testers.

- Test Maintenance Effort (Hours Saved) – Measures how much time AI saves by self-healing test scripts and reducing manual updates.

3. Defect Detection & Resolution

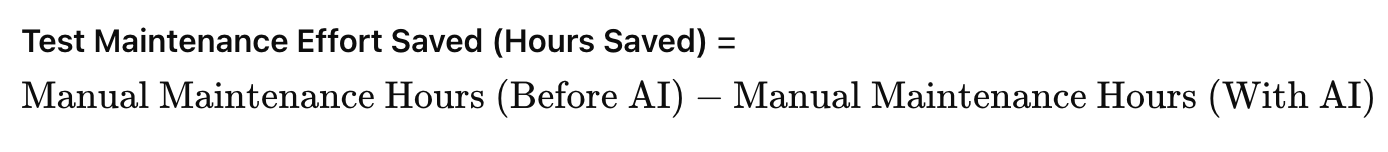

- Defect Detection Rate (%) – The percentage of all known defects that AI-driven tests detect, which can be compared against the share found by human testers.

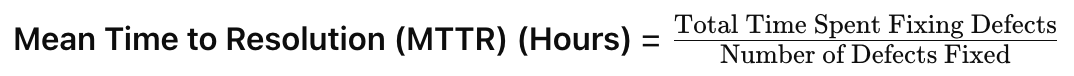

- Defect Resolution Time (MTTR - Mean Time to Resolution) – The average time required to fix AI-identified defects.

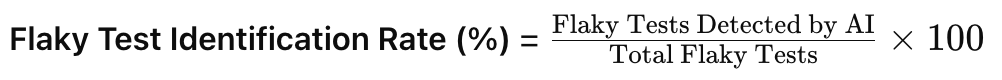

- Flaky Test Identification Rate (%) – How effectively AI detects unstable tests.
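
As a hedged sketch, the three defect metrics above could be computed as follows. The exact definitions vary between teams, so the formulas here are assumptions: detection rate as AI-detected defects over all known defects, MTTR as the mean of resolved-minus-opened times, and flaky identification rate as the share of known flaky tests the tool flagged. All sample data is invented.

```python
from datetime import datetime, timedelta


def defect_detection_rate(detected_by_ai: int, total_defects: int) -> float:
    """Percentage of all known defects that the AI tooling caught."""
    return detected_by_ai * 100 / total_defects if total_defects else 0.0


def mean_time_to_resolution(defects: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - opened) over a non-empty list of defects."""
    durations = [resolved - opened for opened, resolved in defects]
    return sum(durations, timedelta()) / len(durations)


def flaky_identification_rate(flagged: set[str], known_flaky: set[str]) -> float:
    """Percentage of known flaky tests that the tool flagged."""
    return len(flagged & known_flaky) * 100 / len(known_flaky) if known_flaky else 0.0


detection = defect_detection_rate(detected_by_ai=45, total_defects=60)
mttr = mean_time_to_resolution([
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 17)),  # resolved in 8 hours
    (datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 9)),   # resolved in 24 hours
])
flaky_rate = flaky_identification_rate(
    flagged={"test_a", "test_b", "test_x"},
    known_flaky={"test_a", "test_b", "test_c", "test_d"},
)
```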

4. Test Coverage & Adaptability

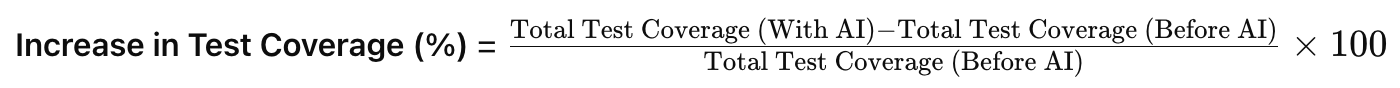

- Increase in Test Coverage (%) – Measures how much AI expands the number of scenarios and edge cases tested.

- Code Change Adaptation Time – How quickly AI adjusts test scripts after application updates.

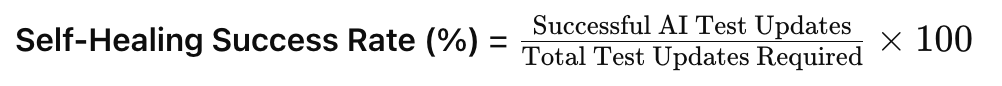

- Self-Healing Success Rate (%) – The percentage of tests AI successfully updates without human intervention.

5. Business Impact Metrics

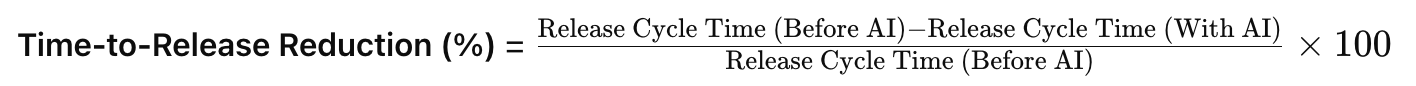

- Time-to-Release Reduction (%) – Tracks how AI contributes to faster software delivery.

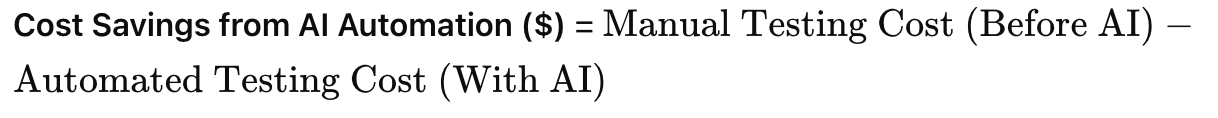

- Cost Savings ($) from AI Automation – Measures the reduction in testing costs due to automation.

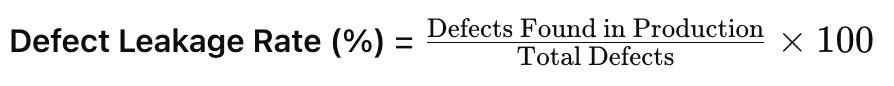

- Defect Leakage Rate (%) – The percentage of defects that AI-driven testing missed and that reached production.

Conclusion

AI is no longer a futuristic concept – it’s a reality that QA teams must embrace to stay competitive. By investing in AI training and adopting the right tools, your team can unlock new levels of efficiency, accuracy, and innovation in test automation.

The future of testing is AI-driven, and the time to prepare is now. Start small, experiment with tools, and empower your team to lead the charge toward smarter, faster, and more reliable software testing.
